Black Box Problem in AI explained

Published: 1 Feb 2026

Artificial intelligence (AI) is increasingly prevalent across industries like healthcare, finance, and consumer products. However, a significant challenge remains: the Black Box Problem, which refers to the lack of transparency in AI systems, especially those built on deep learning models. When algorithmic outcomes cannot be explained, trust, accountability, and ethical oversight all suffer.

The Black Box Problem stems from the difficulty of understanding how AI models, particularly deep neural networks, arrive at their decisions. Their complexity makes it hard to discern the rationale behind a specific conclusion. Improving interpretability is therefore crucial for ensuring that AI systems are reliable and fair.

The main benefits of addressing the Black Box Problem include increased trust and reliability in AI systems, clear accountability for AI decisions, the ability to identify and mitigate bias, and compliance with regulatory and ethical standards.

Addressing the Black Box Problem matters most in critical applications such as medical diagnostics, autonomous driving, and criminal justice, where understanding how an AI system reaches its decisions is essential.

The main approaches to addressing the Black Box Problem are Explainable AI (XAI) techniques, visualization tools, improved model design, and comprehensive transparency and documentation practices. Progress in these areas brings us closer to interpretable and accountable AI systems.


What is the Black Box Problem?

The Black Box Problem in AI refers to the difficulty of understanding and interpreting the internal workings of AI models, particularly deep learning models. These models, typically built from complex neural networks, learn patterns from vast amounts of data to make predictions or decisions. However, their intricate structure makes it hard to uncover the rationale behind a prediction: how they arrive at a specific conclusion remains opaque. Because inputs are transformed through many entangled intermediate steps, tracing a given output back to the factors that produced it is difficult. Addressing the Black Box Problem means shedding light on this process.

How Deep Learning Models Work

To understand why the Black Box Problem is prominent in deep learning models, it’s essential to grasp how these models function:

- Layers and Nodes: Deep learning models are built from layers of nodes (neurons), where each neuron applies a mathematical function to its inputs. A network can stack dozens or even hundreds of these layers, each transforming the data in complex ways.

- Weights and Biases: The neurons in these layers have associated weights and biases that are adjusted during training. The learning process involves modifying these weights and biases to minimize error in predictions. This results in highly optimized models, but the transformation of data through multiple layers becomes less transparent.

- Activation Functions: Each neuron passes its weighted input through an activation function (such as ReLU or sigmoid) that determines its output. These functions introduce non-linearity into the model, allowing it to learn complex patterns but also contributing to its opaque nature.
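The three ingredients above can be made concrete with a minimal sketch: a forward pass through a tiny network written in plain Python. The weights, layer sizes, and the choice of ReLU and sigmoid activations are hypothetical; in a trained model the weights come out of optimization rather than being written by hand. Even at this scale, the output is a nested composition of weighted sums and non-linearities, so no single number "explains" the prediction, and real models have millions or billions of such parameters.

```python
import math


def relu(x: float) -> float:
    """ReLU activation: passes positive values through, zeroes out negatives."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squashes a raw score into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters for a 3-input, 4-hidden-neuron, 1-output network.
W1 = [[0.5, -1.2, 0.3], [1.1, 0.4, -0.7], [-0.6, 0.9, 0.2], [0.8, -0.3, 1.0]]
b1 = [0.1, -0.2, 0.0, 0.05]
W2 = [[1.3, -0.8, 0.6, 0.9]]
b2 = [-0.4]


def forward(x):
    """Input -> hidden layer (ReLU) -> output neuron (sigmoid)."""
    hidden = dense(x, W1, b1, relu)
    return dense(hidden, W2, b2, sigmoid)[0]


print(forward([1.0, 0.5, -0.2]))
```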

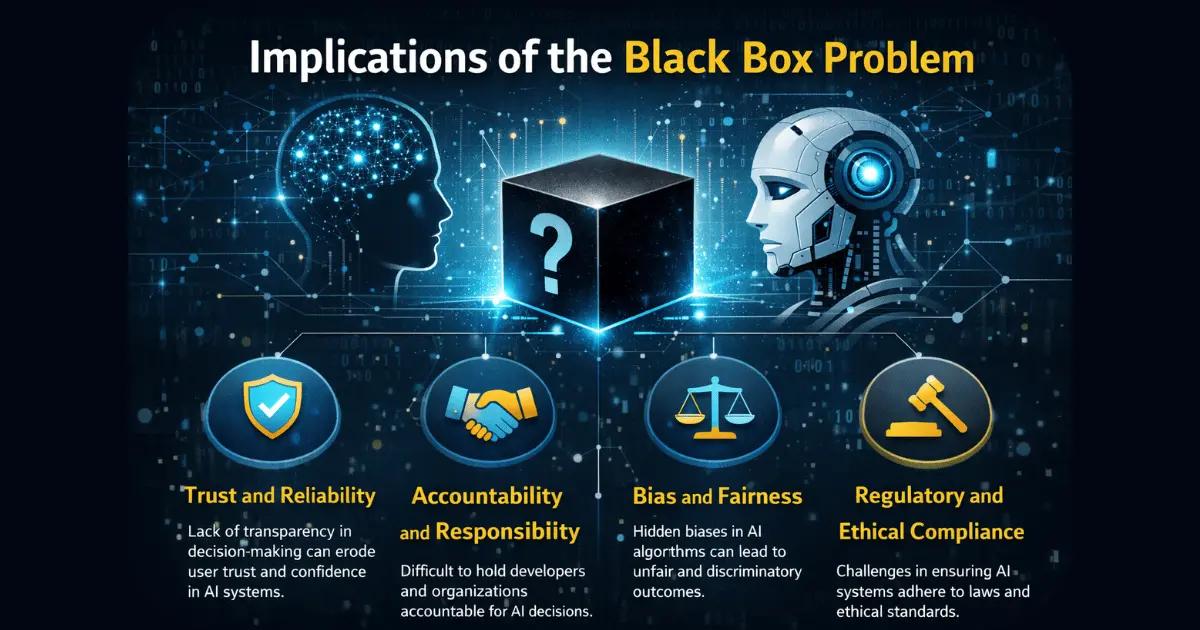

Implications of the Black Box Problem

The Black Box Problem has significant implications across various dimensions:

1. Trust and Reliability

Trust is fundamental when deploying AI systems in critical applications such as healthcare, finance, or autonomous driving. If users and stakeholders cannot understand how decisions are made, it becomes challenging to trust the AI system’s reliability. For instance, if a deep learning model used in medical diagnostics makes a recommendation, doctors and patients need to understand the rationale behind it to make informed decisions. Such trust is essential for the widespread adoption of AI.

2. Accountability and Responsibility

The lack of transparency complicates assigning accountability when AI systems make errors or cause harm. For example, if an autonomous vehicle crashes, determining whether the fault lies with the vehicle’s AI system, the developers, or the operators becomes difficult. This ambiguity creates legal and ethical dilemmas and leaves a gap in accountability that greater transparency can help close.

3. Bias and Fairness

AI systems can inherit and perpetuate biases present in training data. When the decision-making process is opaque, it becomes challenging to identify, correct, or mitigate these biases. This is particularly concerning in areas like criminal justice, where biased AI systems can result in unfair treatment of individuals. Because the opacity hides how the data shapes each decision, biases can go unnoticed and their consequences can be amplified at scale.
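Even when a model's internals stay opaque, its outputs can still be audited. As a minimal sketch, the code below compares the rate of positive predictions across groups (a demographic parity check, one of several fairness metrics). The predictions and group labels are hypothetical, and a gap on its own does not prove unfair treatment; it flags where a closer look is needed.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical outputs of an opaque model (1 = flagged high risk) and group labels.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

Here group A is flagged three times as often as group B, which is exactly the kind of pattern that is hard to spot from inside a black box but easy to measure from its outputs.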

4. Regulatory and Ethical Compliance

Regulations often require transparency and explainability in decision-making processes. For instance, the European Union’s General Data Protection Regulation (GDPR) includes provisions often described as a "right to explanation": individuals subject to automated decision-making are entitled to meaningful information about the logic involved. The Black Box Problem makes compliance with such regulations difficult and highlights the need for both greater transparency and stronger ethical oversight.

Addressing the Black Box Problem

Several approaches and methodologies are being developed to address the Black Box Problem and make AI systems more interpretable and transparent:

1. Explainable AI (XAI)

Explainable AI (XAI) aims to develop models and techniques that offer clear explanations for their decisions. XAI approaches can be categorized into two main types:

Model-Agnostic Methods: These techniques can be applied to any AI model to provide explanations. Examples include:

LIME (Local Interpretable Model-agnostic Explanations): LIME approximates complex models with simpler, interpretable models in the vicinity of a specific prediction, providing insights into how features influence the decision.

SHAP (SHapley Additive exPlanations): SHAP values quantify the contribution of each feature to the model’s prediction, offering a detailed breakdown of how features impact the outcome.
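SHAP builds on Shapley values from cooperative game theory: each feature's contribution is its average marginal effect on the prediction over all possible orderings of features. The sketch below computes these values exactly by brute force for a three-feature toy model. Two assumptions to flag: "removing" a feature is modeled by substituting a fixed baseline value (the SHAP library instead draws on background data and uses much faster approximations), and the model itself is hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x); absent features take baseline values.

    Enumerates every feature subset, so cost grows as 2**n; fine for a toy example.
    """
    n = len(x)

    def value(subset):
        # Features in `subset` keep their real values; the rest use the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical model with an interaction between features 0 and 1.
def model(z):
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[1] - 0.5 * z[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print(phis)
print(sum(phis), model(x) - model(baseline))
```

The contributions come out to about 5, 5, and -2: features 0 and 1 each receive their own linear term plus an equal share of the interaction term, and together the three values add up to the gap between the prediction (8) and the baseline prediction (0).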

Model-Specific Methods: These techniques are tailored to specific types of models to enhance interpretability. For instance:

Decision Trees: Decision trees are naturally interpretable, as they split data based on feature values in a tree-like structure.

Attention Mechanisms: In models like transformers, attention mechanisms can highlight which parts of the input are most relevant to the model’s decision, providing some level of interpretability.
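To show what "highlighting the relevant parts of the input" means numerically, here is a minimal sketch of the scaled dot-product attention weights that a single query assigns to three input tokens. The token vectors and the query are hypothetical; in a real transformer they are learned projections spread across many heads and layers, which is why attention offers only partial insight into the final decision.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical 2-dimensional key vectors for three tokens, plus one query.
tokens = ["the", "scan", "shows"]
keys = [[0.1, 0.0], [1.0, 0.9], [0.3, 0.2]]
query = [1.0, 1.0]

for token, w in zip(tokens, attention_weights(query, keys)):
    print(f"{token:>6}: {w:.2f}")
```

The token whose key aligns best with the query ("scan") receives the largest weight, and this per-token weighting is what attention visualizations display.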

2. Visualization Tools

Visualization tools help make sense of the complex inner workings of AI models. Some effective tools and techniques include:

- Saliency Maps: These highlight the regions of an input (e.g., an image) that most influence the model’s prediction, offering insights into what the model focuses on.

- Feature Importance Graphs: These graphs illustrate the relative importance of different features in the model’s decision-making process.
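A saliency map assigns each input element a score for how strongly the prediction reacts to it. As a minimal, framework-free sketch, the code below estimates the gradient of a toy model's output with respect to each "pixel" using central finite differences and takes its magnitude. The model and pixel values are hypothetical; practical saliency tools compute exact gradients with automatic differentiation and render the scores as a heat map over the image.

```python
def saliency(model, x, eps=1e-4):
    """Approximate |d model / d x_i| for each input using central differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        scores.append(abs(model(up) - model(down)) / (2 * eps))
    return scores


# Hypothetical "image" flattened to 4 pixels and a toy scoring function
# that responds most strongly to pixel 2.
def model(pixels):
    return 0.1 * pixels[0] + 0.0 * pixels[1] + 2.0 * pixels[2] ** 2 - 0.3 * pixels[3]


pixels = [0.5, 0.8, 0.6, 0.2]
print(saliency(model, pixels))
```

The scores come out close to 0.1, 0, 2.4, and 0.3, so a saliency map would light up pixel 2 and leave pixel 1 dark, because the model ignores it.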

3. Improved Model Design

Research is ongoing to design models that balance complexity with interpretability. Some approaches include:

- Simpler Models: Models like linear regression and logistic regression are inherently more interpretable. While they may not capture complex patterns as effectively as deep learning models, they offer clearer insights into decision-making processes.

- Hybrid Models: Combining interpretable models with more complex ones can provide a trade-off between accuracy and explainability.
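Why are simpler models easier to read? In logistic regression the prediction is a weighted sum of features passed through a sigmoid, so each term in the sum doubles as its own explanation. The sketch below assumes an already fitted model; the feature names, coefficients, and applicant values are hypothetical, not taken from a real system.

```python
import math

# Hypothetical coefficients of a fitted logistic regression for a loan decision.
# Each coefficient is the change in log-odds per unit increase of that feature.
INTERCEPT = -1.0
COEFS = {"income_10k": 0.4, "debt_ratio": -2.5, "years_employed": 0.15}


def explain(applicant):
    """Return the approval probability and each feature's log-odds contribution."""
    contributions = {name: COEFS[name] * applicant[name] for name in COEFS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


prob, contribs = explain({"income_10k": 5.0, "debt_ratio": 0.4, "years_employed": 6})
print(f"P(approve) = {prob:.2f}")
for name, c in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {c:+.2f} log-odds")
```

For this applicant, income adds about 2.0 to the log-odds, the debt ratio subtracts 1.0, and employment history adds 0.9, giving an approval probability of about 0.71. A deep network offers no equally direct breakdown, which is the trade-off described above.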

4. Transparency and Documentation

Thorough documentation of AI systems is crucial for understanding and debugging. This includes:

- Data Documentation: Recording details about the data used for training, including its sources, preprocessing steps, and potential biases.

- Model Documentation: Describing the model architecture, hyperparameters, and training procedures.

Transparency in these aspects helps stakeholders understand the context and limitations of the AI system.
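One lightweight way to keep data and model documentation next to the code is a structured record that can be serialized and versioned with each model release. The sketch below mirrors the two kinds of documentation listed above; the class names, field names, and every value are a hypothetical schema for illustration, not a standard format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DataDocumentation:
    """What went into training: where the data came from and how it was prepared."""
    sources: list[str]
    preprocessing_steps: list[str]
    potential_biases: list[str] = field(default_factory=list)


@dataclass
class ModelDocumentation:
    """How the model was built, plus the data record it was trained on."""
    architecture: str
    hyperparameters: dict[str, float]
    training_procedure: str
    data: DataDocumentation
    known_limitations: list[str] = field(default_factory=list)


# Every value below is a hypothetical placeholder.
doc = ModelDocumentation(
    architecture="feed-forward network, 3 hidden layers of 64 units",
    hyperparameters={"learning_rate": 0.001, "dropout": 0.2},
    training_procedure="Adam optimizer, early stopping on validation loss",
    data=DataDocumentation(
        sources=["example_clinic_records_2023"],
        preprocessing_steps=["removed duplicate records", "normalized lab values"],
        potential_biases=["patients from a single region are over-represented"],
    ),
    known_limitations=["not validated on pediatric patients"],
)

# asdict() converts the nested dataclasses into plain dicts for JSON export.
print(json.dumps(asdict(doc), indent=2))
```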

Future Directions

Addressing the Black Box Problem requires a multi-faceted approach and ongoing research. Future directions include:

- Integration of XAI with Other Technologies: Combining explainability with other advanced techniques, such as causal inference and symbolic reasoning, may enhance interpretability while preserving model accuracy.

- Regulatory Frameworks: Developing and implementing regulatory frameworks that mandate transparency and interpretability in AI systems can drive the adoption of explainable practices.

- Ethical Considerations: Ensuring that explainability efforts are aligned with ethical principles, such as fairness and non-discrimination, is essential for building trust in AI systems.

- User-Centric Design: Designing explainability tools and techniques that cater to the needs of end-users, including non-experts, can improve the accessibility and usefulness of explanations.

Conclusion

The Black Box Problem represents a significant challenge in the field of AI, with implications for trust, accountability, and ethical considerations. As AI systems become more integral to various aspects of society, addressing this problem is crucial for ensuring that these systems are reliable, fair, and transparent. By advancing Explainable AI (XAI) techniques, improving model design, and promoting transparency, we can work towards more interpretable and accountable AI systems. Continued research and dialogue, including work on making post-hoc explanations dependable, will be key to overcoming the Black Box Problem and building AI systems that are both powerful and understandable.

Explore

To further explore the Black Box Problem in AI, consider researching Explainable AI (XAI) techniques, Interpretable Machine Learning, Model Agnostic Methods, and Transparency initiatives. Understanding Bias Detection, Fairness principles, and Accountability frameworks is also essential for developing responsible AI systems.

FAQs

What’s wrong with black box AI?

Black box AI is problematic because its decision-making process is not transparent, making results difficult to trust, explain, or regulate.

What is an example of a black box AI?

A common example of black box AI is a deep learning system used in medical diagnosis that provides predictions without explaining how it reached them.

What is one major challenge of a black box AI system?

The main challenge of a black box AI system is the inability to interpret or explain its decisions, which affects accountability and fairness.

Is ChatGPT a black box model?

Yes, ChatGPT is a black box model because its responses are generated through complex neural networks that do not provide full decision transparency.

